AI Isn’t the Problem: People Pretending to Be Experts Are

Over the past year, complaints about “AI slop” have gotten louder across social media, academic spaces, and tech circles. Critics point to hallucinated citations, shallow outputs, and outright nonsense as proof that AI is useless, dangerous, or fundamentally broken. Some of that frustration is understandable. Anyone who has spent real time working with AI knows its limitations surface quickly once you stop treating it like magic.

This was one of the first lessons I learned when I started seriously studying AI. We are nowhere near artificial general intelligence (AGI), and pretending otherwise only sets people up for failure. Today’s models require human judgment, context, and verification to be used responsibly. AI can assist, accelerate, and augment, but it cannot replace the critical thinking needed to evaluate whether something is true.

AI is imperfect. Engineers know it. Enthusiasts know it. Ethicists have been pointing this out from the beginning. Large language models (LLMs) were never designed to function as authoritative sources of truth, and treating them as such has always been a misuse of the technology. None of this is new.

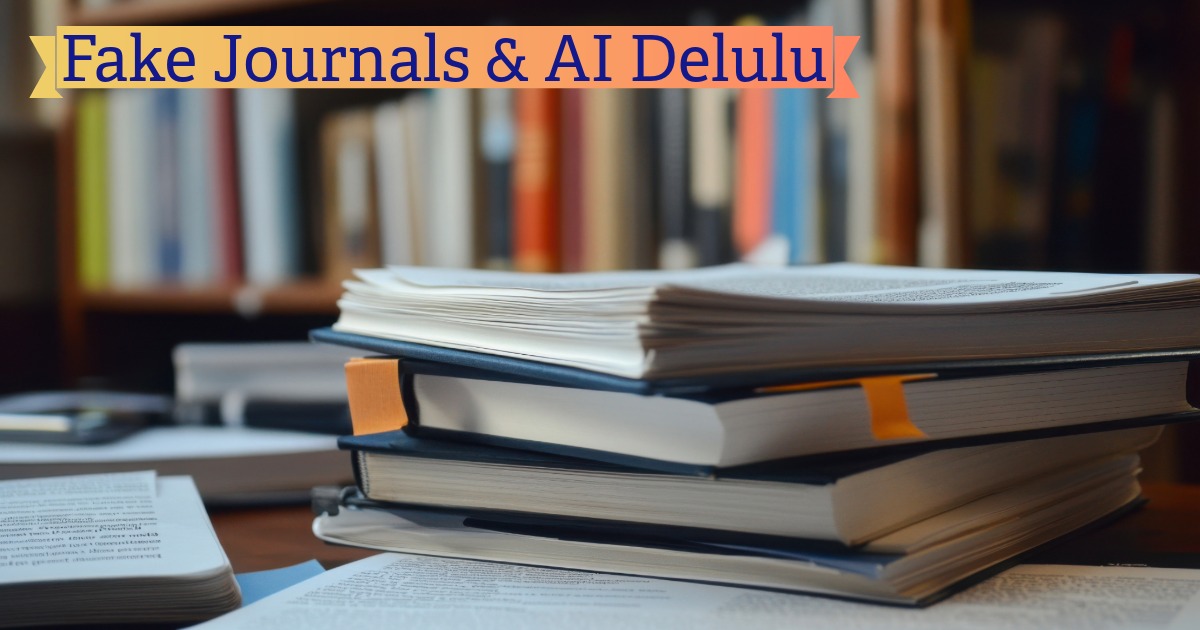

What is new - and far more concerning - is how confidently people misuse AI while presenting themselves as experts. In the rush to publish, impress, or go viral, basic verification gets skipped. Hallucinated citations are waved through. Fake academic journals are treated as legitimate sources. And when the errors inevitably surface, the blame is often shifted onto the tool instead of the humans who failed to use it responsibly.

As someone who has written research papers, I find this trend especially alarming. Academic publishing is built on credibility. Researchers are trained to spend hours - sometimes days - tracking down reliable sources and documenting every detail: the title, the author, the publication date, and where the work appeared. URLs from established journals, magazines, and reference databases exist so readers can verify claims for themselves. Against that backdrop, citing AI output as a source (effectively saying “ChatGPT said this on X date”) is the academic equivalent of telling readers, “Trust me, bro.” It replaces verifiable scholarship with performative confidence, and that should worry anyone who cares about research integrity.

This is where the conversation around “AI slop” loses the plot. The real problem isn’t that AI makes mistakes - it’s that people stop thinking once AI is involved. When critical thinking, verification, and domain knowledge are replaced with blind trust and unchecked confidence, the result isn’t innovation. It’s sloppy scholarship dressed up as progress.

Imagine you’re a student assigned to write a research paper for a World History class. You’re given broad freedom to choose your topic - maybe the rise of pop music, the reign of Queen Elizabeth II, or a major historical turning point covered in your textbook. As you research, you stumble across an article claiming to reveal a hidden chapter of history - something strange, fascinating, and completely absent from your course materials.

The article looks academic. It cites “research.” It references a journal you’ve never heard of. Maybe it even connects a real artifact - like the Antikythera mechanism - to a bold claim about advanced ancient technology or evidence of extraterrestrial life. It’s intriguing. It feels like a discovery. And without strong background knowledge, it’s easy to mistake novelty for credibility.

This is where critical thinking used to step in. Students were taught to question the source, verify the journal, and cross-check claims against established scholarship. Today, however, AI-generated text and fabricated citations can give fringe ideas the appearance of legitimacy at scale. When fake journals and hallucinated references enter the mix, the line between genuine research and convincing fiction becomes dangerously thin.

My own first real exposure to research papers goes back to my junior year history class in high school. We were assigned a research paper on a historical event, and I chose to write about 1950s–1960s pop music and how it laid the groundwork for the R&B and hip-hop of the 1990s. It wasn’t just about music; it was about tracing influence, context, and cultural continuity over time.

That experience stuck with me. It taught me early on that good research isn’t about chasing the most surprising or sensational claim. It’s about understanding how ideas evolve, where they come from, and how they’re supported by credible sources. Even back then, the challenge wasn’t finding information; it was learning to separate meaningful insight from surface-level noise.

I want to be clear: I’m not claiming to be a scholar of AI law or policy. I’m not. But I have studied Responsible and Ethical AI, earned certifications, and deliberately stay informed through professional communities and ongoing discussions. That perspective has only reinforced one conclusion for me: anyone who plans to rely heavily on AI, whether professionally or personally, needs a baseline understanding of ethical and responsible use.

This shouldn’t be limited to people entering the AI industry. Writers, researchers, students, marketers, educators - anyone using AI to generate or support work that will be shared with others - should treat Responsible AI education as a prerequisite, not an optional add-on. Without it, the outcome is predictable. When tools designed to assist are used without judgment or accountability, the result isn’t insight or efficiency. It’s AI slop - now conveniently formatted as an academic journal draft.

None of this should come as a surprise. Most people already understand AI’s limitations; we’ve seen them play out publicly through distorted AI-generated artwork, buggy and untested AI-written code, and deeply unsettling deepfakes. The same risks apply to written content, including research papers and academic journals. But here’s the part that gets me: we learned the standard for avoiding this mess when we were schoolchildren. What we learned in school is still relevant in adult life, even when it doesn’t neatly map to our day jobs. You check and re-check your work. You verify your sources. You take critique, peer review included, and apply it before you call something a final draft.

If you skip that because AI “makes it easy,” you’re not just being an AI idgit. You’re being an idgit. Period. If a journal article is built on AI-generated slop and unchecked citations, it’s closer to speculative fiction than scholarship.

Imagine the backlash - and the collective embarrassment - if articles in widely read or well-regarded academic journals were later revealed to be hoaxes. The damage would ripple through the academic community and beyond, eroding trust in legitimate research and fueling skepticism about AI’s role in knowledge creation. This hypothetical scenario underscores the urgent need for vigilance, transparency, and integrity when integrating AI into scholarly work.